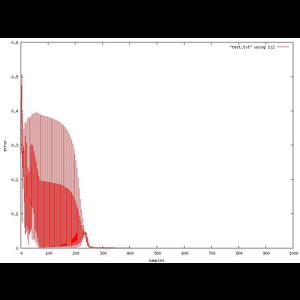

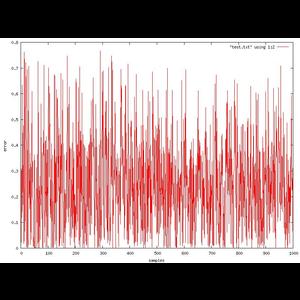

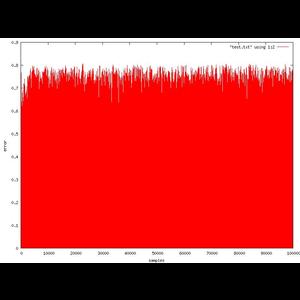

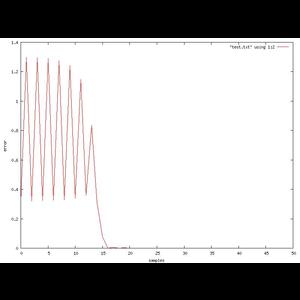

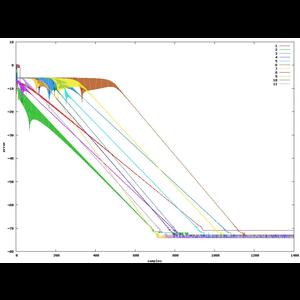

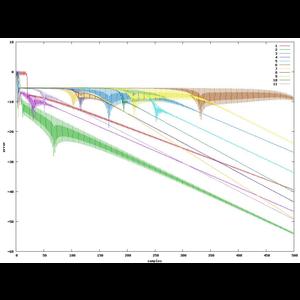

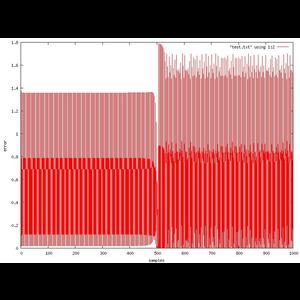

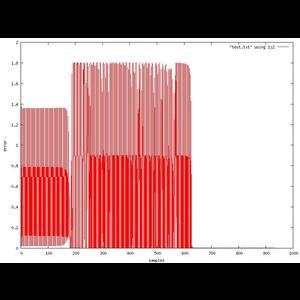

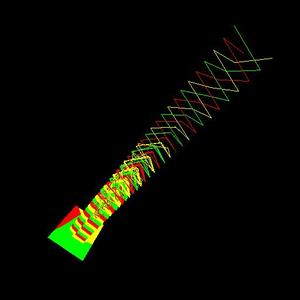

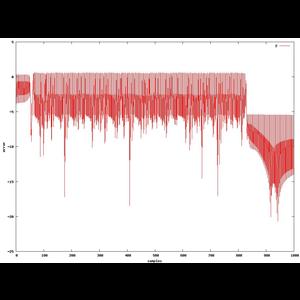

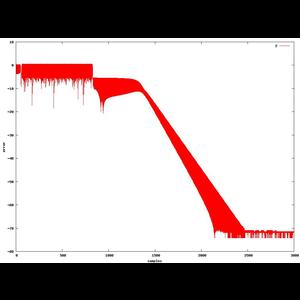

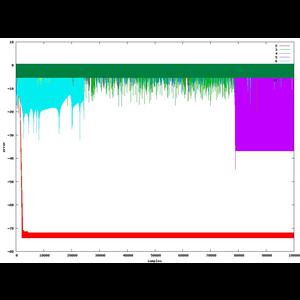

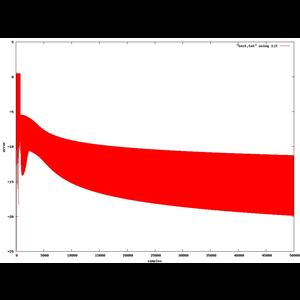

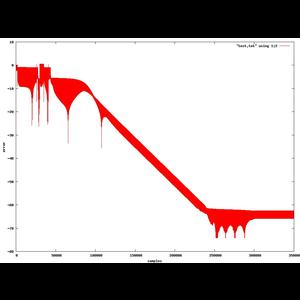

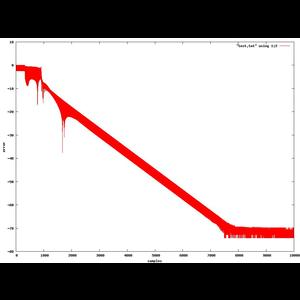

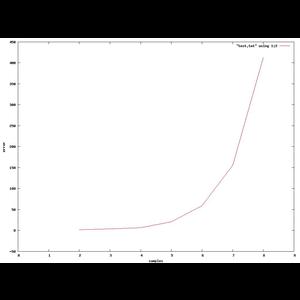

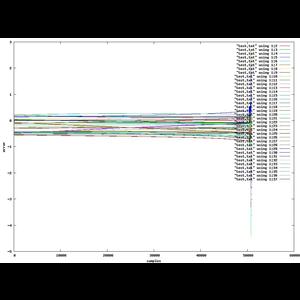

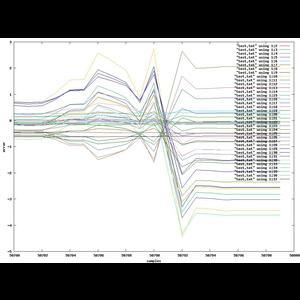

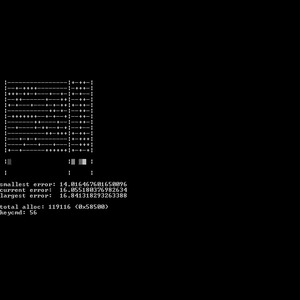

A recurrent neural network that solves some bitwise problems, like addition and multiplication. It's fun to watch the error converge for each binary digit.

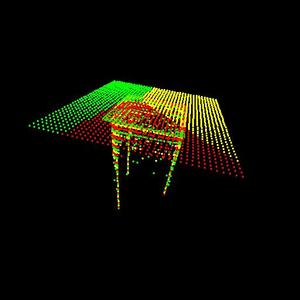

The idea came from analyzing backpropagation networks and Hopfield networks, and concluding that the two could be combined to simulate short-term memory as stable states between the input and output formulas: the eigenvectors of the recurrent function. I did some research and soon found out the approach was already published as the 'Recurrent Neural Network.' I also later learned that RNNs compete poorly with the (more modern) Long Short-Term Memory (LSTM) network models on certain tasks. I have not yet implemented an LSTM, though I'd really like to. On other tasks the RNN still pulls ahead.

Naturally, while contemplating RNNs I was also hard at work contemplating the operation of our own minds. I came up with a few conclusions in the process. An incredible majority of the reactions, opinions, observations, and perceptions we form through the day - the assumptions we make about how things are - are all in our heads: byproducts of memories sticking around in the back of our minds. You guess your friend wore that shirt for one reason when they in fact wore it for a completely different one. This is not to dismiss the concept of the soul - a very real thing (though not a physical one, as I was told Time magazine spent extensive work discovering and pointing out) - for coincidences observed in my own life, as well as in others', have convinced me that certain things operate on a deeper level than the physical.

Another conclusion this led me to (and one I stood behind for a very short time) was that memories are stored not as single neurons or entities within our minds, but rather as eigenstates of electrical impulses over the entire system of our brain. This is a popular and commonly held belief, so I've heard, amongst the nihilist scientific community. It is one that - gratefully - was debunked by a finding I remember Discover magazine reporting on only within this year: that our brains form individual neurons for each objective concept we perceive. The fact that the LSTM model - which maintains recurrent signals per-neuron rather than as eigenstates of the entire system - outperforms the RNN model at the task of maintaining stable memory states backs this up in the simulation realm. What does this mean? That there's more to objective thought and objective truth than we gave Plato credit for. How does our mind know what to delegate neurons towards?

For kicks I made the code compatible with DJGPP and MSVC as well. I did the whole preprocessor dance between the two compilers.
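For anyone who hasn't done that dance before, here is a rough sketch of what it tends to look like. This is not lifted from the project: `COMPILER_NAME`, `FORCE_INLINE`, and `compiler_name()` are hypothetical names made up for illustration, and forced inlining is just one example of something the two compilers spell differently. The only parts that come from the compilers themselves are the predefined macros `_MSC_VER` (MSVC) and `__DJGPP__` (DJGPP).

```cpp
// Pick per-compiler spellings once, then use the neutral macro names everywhere else.
// COMPILER_NAME and FORCE_INLINE are hypothetical names chosen for this sketch.
#if defined(_MSC_VER)
    // Microsoft Visual C++
    #define COMPILER_NAME "MSVC"
    #define FORCE_INLINE __forceinline
#elif defined(__DJGPP__)
    // DJGPP is a GCC port for DOS, so GCC attribute syntax applies.
    #define COMPILER_NAME "DJGPP"
    #define FORCE_INLINE inline __attribute__((always_inline))
#else
    // Any other compiler: fall back to plain standard C++.
    #define COMPILER_NAME "other"
    #define FORCE_INLINE inline
#endif

// Shared code only ever sees the neutral macro, never the compiler-specific keyword.
FORCE_INLINE const char* compiler_name() {
    return COMPILER_NAME;
}
```

The point of the fallback branch is that the code still builds on a compiler neither branch anticipated.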

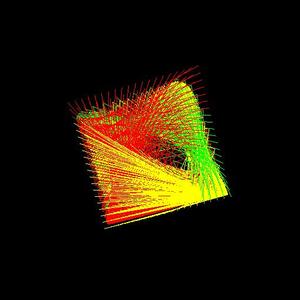

Included are some classes for rank-1, 2, and 3 tensors. The math that makes use of them was fun to work out, and took me a few days' worth of free time.

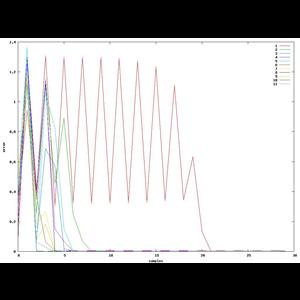

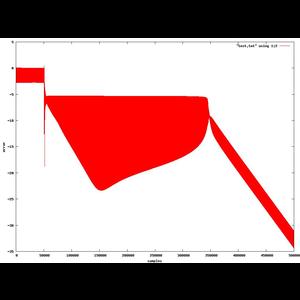

Hey kids, you can develop your own Recurrent Neural Network at home! Here's how it works: First, start with your linear system. Wrap it in a transfer function (hyperbolic tangent has been good to me). Calculate an error as the sum of the squared differences between your desired and actual outputs (squaring keeps positive and negative differences from cancelling out). Then use the derivative of the error with respect to each of the weights to find which way changing each weight will result in more error. Adjust the weights in the other direction and they will converge on your desired signals.
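To make the recipe concrete, here is a minimal sketch of those steps for a single tanh-wrapped linear layer. It is not the project's code, and it leaves out the recurrence (feeding outputs back in as inputs) to keep the update rule visible. The names `TanhLayer` and `train_and_gate`, the squared-error measure E = ½ Σ (y − d)², the -1/+1 bit encoding, and the learning rate are all assumptions made for illustration. With that error, the derivative for each weight works out to (y − d)(1 − y²) times the input on that weight, since the derivative of tanh is 1 − tanh².

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// All names here are hypothetical; none are taken from the original source.
struct TanhLayer {
    std::size_t inputs;
    std::size_t outputs;
    std::vector<double> w;  // row-major, outputs x (inputs + 1); last column is the bias

    TanhLayer(std::size_t in, std::size_t out)
        : inputs(in), outputs(out), w(out * (in + 1)) {
        // Small, deterministic starting weights: -0.1, 0.0, 0.1, repeating.
        for (std::size_t k = 0; k < w.size(); ++k)
            w[k] = 0.1 * (static_cast<double>(k % 3) - 1.0);
    }

    // Linear system wrapped in the transfer function: y = tanh(W x + b).
    std::vector<double> forward(const std::vector<double>& x) const {
        assert(x.size() == inputs);
        std::vector<double> y(outputs);
        for (std::size_t i = 0; i < outputs; ++i) {
            const std::size_t row = i * (inputs + 1);
            double sum = w[row + inputs];  // bias term
            for (std::size_t j = 0; j < inputs; ++j)
                sum += w[row + j] * x[j];
            y[i] = std::tanh(sum);
        }
        return y;
    }

    // One gradient-descent step on E = 1/2 * sum((y - d)^2).
    // Returns the error measured before the weights were adjusted.
    double train(const std::vector<double>& x, const std::vector<double>& d, double rate) {
        assert(d.size() == outputs);
        const std::vector<double> y = forward(x);
        double error = 0.0;
        for (std::size_t i = 0; i < outputs; ++i) {
            const std::size_t row = i * (inputs + 1);
            const double diff = y[i] - d[i];
            error += 0.5 * diff * diff;
            // dE/d(sum_i) = (y - d) * (1 - y^2), because d/ds tanh(s) = 1 - tanh(s)^2.
            const double delta = diff * (1.0 - y[i] * y[i]);
            // Step each weight against its derivative, i.e. toward less error.
            for (std::size_t j = 0; j < inputs; ++j)
                w[row + j] -= rate * delta * x[j];
            w[row + inputs] -= rate * delta;
        }
        return error;
    }
};

// Trains on bitwise AND with bits encoded as -1/+1 (an assumed encoding).
// Returns the summed error over the four patterns in the final epoch.
double train_and_gate(TanhLayer& net, int epochs, double rate) {
    const double X[4][2] = {{-1, -1}, {-1, 1}, {1, -1}, {1, 1}};
    const double D[4] = {-1, -1, -1, 1};
    double total = 0.0;
    for (int e = 0; e < epochs; ++e) {
        total = 0.0;
        for (int p = 0; p < 4; ++p)
            total += net.train({X[p][0], X[p][1]}, {D[p]}, rate);
    }
    return total;
}
```

Calling `train_and_gate(net, 2000, 0.1)` on a `TanhLayer net(2, 1)` and printing the returned error every so often is a cheap way to watch it converge. Since tanh never quite reaches -1 or +1, the error keeps shrinking rather than hitting zero.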